By Kirsten Cosser

The topic of artificial intelligence (AI) has dominated the news cycle in 2023. Central to the debate have been questions about what the technology will mean for the online information ecosystem. Concerns about election tampering, inaccurate health advice and impersonation are rife. And we have started to see examples that warrant these fears.

AI-generated images depicting political events that never happened are already in circulation. Some appear to show an explosion at the Pentagon, the headquarters of the US’s Department of Defense. Others show former US president Donald Trump being arrested and French president Emmanuel Macron caught up in a protest.

More innocuous examples have tended to be even more convincing, like images of Pope Francis, head of the Catholic church, wearing a stylish puffer jacket.

Altered images and videos being used to spread false information is nothing new. In some ways, methods for detecting this kind of deception remain the same.

But AI technologies do pose some unique challenges in the fight against falsehood – and they’re getting better fast. Knowing how to spot this content will be a useful skill as we increasingly come into contact with media that might have been tampered with or generated using AI. Here are some things to look out for in images and videos online.

Clues within the image or video itself

Tools that use AI to generate or manipulate content work in different ways, and leave behind different clues in the content. As the technology improves, though, fewer mistakes will be made. The clues discussed below are things that are distinguishable at the time of writing, but may not always be.

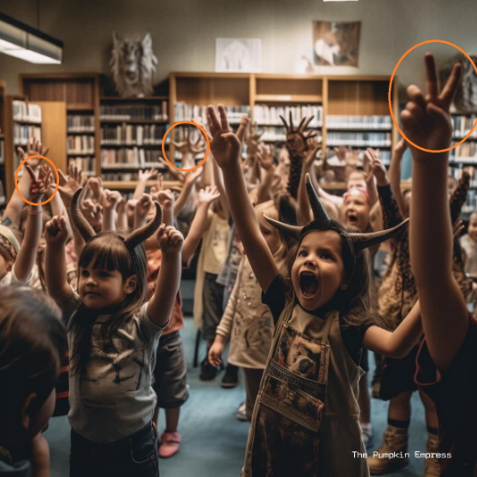

Check for a watermark or disclaimer

When it comes to AI-generated images, the devil is in the details. In May 2023, a series of images circulated on social media in South Africa showing young children in a library, surrounded by satanic imagery. In the images, the children sit on the floor around a pentagram, the five-pointed star often associated with the occult, being read to by demonic-looking figures or wearing costumes and horns. The scenes were dubbed the “Baphomet Book Club” by some social media users reposting the images.

A number of users, not realising the images were AI-generated, expressed their outrage. This example shows how easy it is to create images that appear realistic at first glance. It also shows how easily social media users can be fooled, even if that wasn’t the intention of the creator of the images. The user who first uploaded the images to the internet, who goes by “The Pumpkin Empress”, included a watermark with this account name in the images, though this was later removed in some of the posts.

Watermarks are designs added to something to show its authenticity or verify where it comes from. Banknotes, for example, include watermarks as a sign of authenticity. Large, visible watermarks are also placed over images on stock photo websites to prevent their use before purchase.

A quick Google search for “The Pumpkin Empress” would have led to Instagram, Twitter and Facebook accounts filled with similar AI-generated images, clearly posted by someone with a penchant for creating demonic-themed AI art, rather than documenting a real phenomenon.

This is the first major clue. Sometimes there are subtle errors in content made by AI tools, but sometimes there’s actual text or some other watermark that can quickly reveal that the images are not real photos. Although these are easy to remove later, and someone who is deliberately trying to fool you is unlikely to put a revealing watermark on an image, it’s always important to look for this as a first step.

For images

Jean le Roux is a research associate at the Atlantic Council’s Digital Forensic Research Lab. He studies technologies like AI and how they relate to disinformation. He told Africa Check that because of the way the algorithms work in current AI-powered image generators, these tools struggle to perfectly recreate certain common features of photos.

One classic example is hands. These tools initially really struggled with hands. Human figures appeared with warped, missing or extra fingers. But this once-telltale sign has swiftly become less reliable as the generators have improved.

(Source: The Pumpkin Empress via Facebook. AI-generated)

The AI tools have the same issue with teeth as with hands, so you should still look carefully at other details of human faces and bodies. Le Roux also suggested looking out for mismatched eyes and any missing, distorted, melting or even doubled facial features or limbs.

But some clues also come down to something just feeling “off” or strange at first glance, similar to the Uncanny Valley effect, where something that looks very humanlike but isn’t completely convincing evokes a feeling of discomfort. According to a guide from fact-checkers at Agence France-Presse (AFP), skin that appears too smooth or polished, or has a plasticky sheen, might also be a sign that an image is AI-generated.

(Source: The Pumpkin Empress via Facebook. AI-generated)

Accessories, AFP wrote, can also be red flags. Look out for glasses or jewellery that look as though they’ve melted into a person’s face. AI models often struggle to make accessories symmetrical and may show people wearing mismatched earrings or glasses with oddly shaped frames.

Apart from human figures, looking at the details of text, signs or the background in the image could be useful. Le Roux also noted that these tools struggled where there were repeating patterns, such as buttons on a phone or stitching on material.

But, as AI expert Henry Ajder told news site Deutsche Welle, it might be harder to spot AI-generated landscape scenes than images of human beings. If you are looking at a landscape, your best bet might be to compare the details in the image to photos you can confirm were taken in the place that the image is meant to represent.

(Source: The Pumpkin Empress via Facebook. AI-generated)

In summary, pay special attention to:

- Accessories (earrings, glasses)
- Backgrounds (odd or melting shapes or people)
- Body parts (missing fingers, extra body parts)
- Patterns (repeated small objects like device buttons, patterns on fabric, or a person’s teeth)
- Textures (skin sheen, or textures melting, blending or repeating in an unnatural-looking way)
- Lighting and shadows

For videos

Deepfakes are videos that have been manipulated using tools to combine or generate human faces and bodies. These audiovisual manipulations run on a spectrum from true deepfakes, which closely resemble reality and require specialised tools, to “cheap fakes”, which can be as simple as altering the audio in a video clip to obscure some words in a political speech, thereby changing its meaning. In these cases, finding the original unedited video can be enough to disprove a false claim.

Although realistic deepfakes might be difficult to detect, especially without specialised skills, there are often clues in cheap fakes that you can look out for, depending on what has been done, whether lip-synching, superimposing a different face onto a body or changing the video speed.

Le Roux told Africa Check that there are often clues in the audio, like the voice or accent sounding “off”. There might also be issues with body movements, especially where cheaper or free software is used. Sometimes still images are used with animated mouths or heads superimposed on them, which means there is a suspicious lack of movement in the rest of the body. A general Uncanny Valley effect is also a clue, which might show up in subtle details like the lighting, skin colour or shadows seeming unnatural.

These clues are all identifiable in a video of South African president Cyril Ramaphosa that circulated on social media in March 2023. In the video, Ramaphosa, who appeared to be addressing the country, outlined a government plan to address the ongoing energy crisis by demolishing the Voortrekker Monument and the Loftus Versfeld rugby stadium, both in the country’s administrative capital city of Pretoria, to make way for new “large-scale diesel generators”.

But the president looked oddly static, with his face and body barely moving as he spoke. His voice and accent also likely sounded strange to most South Africans, and it was crudely overlaid onto mouth movements that didn’t perfectly match up to the words.

- Look at facial details. Pay special attention to mouth and body movement, eyes (are they moving or blinking?) and skin details.
- Listen to the voice carefully. Does it sound robotic or unnatural? Does the accent match other recordings of the person speaking? Is the voice aligned with mouth movements? Does it sound like it’s been slowed down or sped up?
- Inspect the background. Does it match the lighting and general look of the body and face? Does it make sense in the context of the video?

Context is everything

As technology advances and it becomes more difficult to detect fake images or videos, people will need to rely on other tools to separate fact from fiction. Thankfully these involve going back to the basics by looking at how an image or video fits into the world more broadly.

Whether something is a convincing, resource-intensive deepfake, or a quick cheap fake, the basic principles of detecting misinformation through context still apply.

1. Think about the overall message or feeling being conveyed. According to Le Roux, looking at context comes down to asking yourself: “What is this website trying to tell me? What are these social media accounts trying to push?”

Try to identify the emotions the content is trying to evoke. Does it seem like it’s intended to make you angry, outraged or disgusted? This might be a red flag.

2. What are other people saying? It’s often useful to look outside of your immediate reaction to see what other people think. Read the comments on a social media post, as these can give some good clues, but also look at what trustworthy sources are saying. If what is being depicted is an event with a big impact (say, the president demolishing a famous cultural monument to make way for a power station), it will almost certainly have received news coverage by major outlets. If it hasn’t, or if the suspicious content contradicts what reliable news outlets or scientific bodies are saying, that might be a clue that it’s misleading.

3. Google or Bing or DuckDuckGo. Do a search! If you have a sneaking suspicion something might not be entirely accurate (or even if you don’t), see what other information is available online about it. If a video depicts an event, say Trump being arrested, type this into a search engine.

If you think a video might have been edited in some way to change its meaning, see if you can find the original video. This might be possible by searching for the name of the event or some words relating to it (for example, “Ramaphosa address to the nation April 2023”).

You might find a reverse search works better. Though generally used for images, there are easy ways to reverse search videos. Tools such as the one from InVid do this for you, using screenshots from the video.

4. Compare with real images or videos. Just as you would use visual clues to work out where a photo was taken, try to identify details that you can compare with accurate information. If an image is meant to show a real person or place, then facial features, haircuts, landscapes and other details should match real life.

Source JAMLAB


.dialog-type-confirm .dialog-buttons-wrapper .e-btn.dialog-button.dialog-ok.dialog-cancel:hover,

.dialog-type-alert .dialog-buttons-wrapper .e-btn.e-btn-txt.dialog-button.dialog-ok:hover,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-ok.dialog-skip:hover,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-ok.dialog-skip:hover,

.dialog-type-alert .dialog-buttons-wrapper .e-btn.dialog-button.dialog-ok.dialog-cancel:hover,

.e-btn.e-primary.e-btn-txt:focus,

#elementor-deactivate-feedback-modal .e-primary.dialog-skip:focus,

#elementor-deactivate-feedback-modal .e-btn-txt.dialog-submit:focus,

#elementor-deactivate-feedback-modal .dialog-submit.dialog-skip:focus,

.dialog-type-confirm .dialog-buttons-wrapper .e-btn.e-btn-txt.dialog-button.dialog-take_over:focus,

.dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-take_over.dialog-skip:focus,

#elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-take_over.dialog-skip:focus,

.dialog-type-alert .dialog-buttons-wrapper .e-btn.e-btn-txt.dialog-button.dialog-take_over:focus,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-take_over.dialog-skip:focus,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-take_over.dialog-skip:focus,

.dialog-type-confirm .dialog-buttons-wrapper .e-btn.e-primary.dialog-button.dialog-cancel:focus,

.dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-cancel.dialog-submit:focus,

#elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-cancel.dialog-submit:focus,

.dialog-type-confirm .dialog-buttons-wrapper .e-btn.dialog-button.dialog-cancel.dialog-take_over:focus,

.dialog-type-alert .dialog-buttons-wrapper .e-btn.e-primary.dialog-button.dialog-cancel:focus,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-cancel.dialog-submit:focus,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-cancel.dialog-submit:focus,

.dialog-type-alert .dialog-buttons-wrapper .e-btn.dialog-button.dialog-cancel.dialog-take_over:focus,

.dialog-type-confirm .dialog-buttons-wrapper .e-btn.e-btn-txt.dialog-button.dialog-ok:focus,

.dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-ok.dialog-skip:focus,

#elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-ok.dialog-skip:focus,

.dialog-type-confirm .dialog-buttons-wrapper .e-btn.dialog-button.dialog-ok.dialog-cancel:focus,

.dialog-type-alert .dialog-buttons-wrapper .e-btn.e-btn-txt.dialog-button.dialog-ok:focus,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-ok.dialog-skip:focus,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-ok.dialog-skip:focus,

.dialog-type-alert .dialog-buttons-wrapper .e-btn.dialog-button.dialog-ok.dialog-cancel:focus {

background: var(--e-a-bg-primary);

}

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-ok.dialog-cancel {

background: transparent;

color: var(--e-a-color-primary-bold);

}

.dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-primary.e-btn-txt:hover, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.e-primary.dialog-skip:hover, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-primary.dialog-skip:hover, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.e-btn-txt.dialog-submit:hover, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-submit.dialog-skip:hover, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-submit:hover, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-submit.dialog-skip:hover, .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-take_over:hover, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-take_over.dialog-skip:hover, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-take_over.dialog-skip:hover, .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-primary.dialog-cancel:hover, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-cancel.dialog-submit:hover, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-cancel.dialog-submit:hover, .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-cancel.dialog-take_over:hover, .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-ok:hover, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-ok.dialog-skip:hover, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper 
.dialog-button.dialog-ok.dialog-skip:hover, .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-ok.dialog-cancel:hover, .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-primary.e-btn-txt:focus, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.e-primary.dialog-skip:focus, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-primary.dialog-skip:focus, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.e-btn-txt.dialog-submit:focus, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-submit.dialog-skip:focus, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-submit:focus, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-submit.dialog-skip:focus, .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-take_over:focus, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-take_over.dialog-skip:focus, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-take_over.dialog-skip:focus, .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-primary.dialog-cancel:focus, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-cancel.dialog-submit:focus, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-cancel.dialog-submit:focus, .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-cancel.dialog-take_over:focus, .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-ok:focus, .dialog-type-confirm .dialog-buttons-wrapper #elementor-deactivate-feedback-modal 
.dialog-button.dialog-ok.dialog-skip:focus, #elementor-deactivate-feedback-modal .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-ok.dialog-skip:focus, .dialog-type-confirm .dialog-buttons-wrapper .dialog-button.dialog-ok.dialog-cancel:focus,

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-primary.e-btn-txt:hover,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.e-primary.dialog-skip:hover,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-primary.dialog-skip:hover,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.e-btn-txt.dialog-submit:hover,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-submit.dialog-skip:hover,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-submit:hover,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-submit.dialog-skip:hover,

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-take_over:hover,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-take_over.dialog-skip:hover,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-take_over.dialog-skip:hover,

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-primary.dialog-cancel:hover,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-cancel.dialog-submit:hover,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-cancel.dialog-submit:hover,

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-cancel.dialog-take_over:hover,

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-ok:hover,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-ok.dialog-skip:hover,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-ok.dialog-skip:hover,

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-ok.dialog-cancel:hover,

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-primary.e-btn-txt:focus,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.e-primary.dialog-skip:focus,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-primary.dialog-skip:focus,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.e-btn-txt.dialog-submit:focus,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-submit.dialog-skip:focus,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-submit:focus,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-submit.dialog-skip:focus,

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-take_over:focus,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-take_over.dialog-skip:focus,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-take_over.dialog-skip:focus,

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-primary.dialog-cancel:focus,

.dialog-type-alert .dialog-buttons-wrapper #elementor-deactivate-feedback-modal .dialog-button.dialog-cancel.dialog-submit:focus,

#elementor-deactivate-feedback-modal .dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-cancel.dialog-submit:focus,

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.dialog-cancel.dialog-take_over:focus,

.dialog-type-alert .dialog-buttons-wrapper .dialog-button.e-btn-txt.dialog-ok:focus,